Working with Community College Partners to Create AR Astronomy Learning Activities That Are Collaborative and Impactful

by Robb Lindgren and James Planey

Key Ideas

- Partner contributions led to two key shifts in the goals of the learning experience: (1) from an applied task to foundational astronomy skills; and (2) from separate roles for students to an authentic challenge shared by small groups of students.

- Developers created a narrative-centered learning experience in which students could choose AR for its unique benefits or continue with familiar software.

- Transformation occurs when researchers honor practitioners’ educational knowledge and design ideas (even when this means pivoting away from commitments made in the original research proposal), while also sharing with practitioners the insights that emerge from the research.

Background

The CEASAR Project (Connections of Earth and Sky with Augmented Reality) (NSF grant IIS-1822796) was conceived as a way to integrate cutting-edge technologies, such as augmented reality (AR) headsets, into laboratory activities for students. Headset-based augmented reality has recently become accessible through technologies like the Microsoft HoloLens. So far, applications of the technology have largely targeted medical, engineering, and design students in university contexts.

The project involves a partnership between researchers at the University of Illinois Urbana-Champaign, technologists and curriculum developers at the Concord Consortium, and astronomy instructors at institutions of higher education in the Champaign-Urbana area, including Parkland College. We began the partnership by reaching out to the director of the Staerkel Planetarium, located on the Parkland College campus in Champaign, Illinois. Shortly after the CEASAR project was awarded, the director announced his retirement and introduced us to two Parkland astronomy instructors, who have since worked closely with us for several years to explore the capabilities of headset-based AR, design the CEASAR environment to broadly support their learning goals, and use the platform in their courses.

The CEASAR project aims to create an exemplar of broader and more diverse uses of AR, such as with community college students who would otherwise not have access to the technology. Our goal was to explore how immersive technologies could add value to collaborative learning activities and reinforce knowledge and skills consistent with established course objectives. More specifically, the aim was to bring the immersive experience of the planetarium into the social environment of the astronomy classroom by leveraging a particular affordance of AR headsets: they allow simultaneous interaction with digital content and with other students.

Story of the Partnership

The CEASAR project was conceived as a design-based research project. We expected that once we started working with our practitioner partners, our initial assumptions and priorities would shift. This certainly proved to be the case, and the two best examples were (1) our approach to designing the collaborative tasks and (2) our beliefs about how the technology could support the way groups work together.

We knew from the outset that we wanted to give students ample space to explore the simulated night sky environment and to motivate them to investigate the spatial relationships between the Earth, the sun, and other visible stars in the sky. We initially proposed to accomplish this by creating ‘solar engineering’ tasks, such as determining optimal locations and angles for installing solar panels. We also proposed that all students would wear AR headsets but would be assigned different roles, each with access to different digital resources and personalized in-simulation supports. Both of these design goals were challenged once we entered into concerted discussions with our astronomy instructor partners. The partners had done similar engineering activities in their courses previously, but often near the end of a course or in more advanced courses.

After collectively reviewing course progressions and learning goals, our partners suggested more purposeful and problem-centered activities. These activities emphasized fundamental astronomy skills of observation and calculation, such as establishing cardinal coordinates and determining one’s location based on a view of the night sky. The instructors also nudged us away from creating explicit roles for our learners. They argued that explicit roles could inadvertently lead to siloing of knowledge, limiting the flexibility of the tasks to adapt to changes (e.g., absences) and discouraging collaboration. They instead suggested an emphasis on designing a clear and accessible problem that all members of a group could pursue using the available devices. So rather than giving all members of the group an immersive headset, we would develop a simulation that could be viewed either within an AR headset or using a tablet computer; students within a particular group could choose which devices to use and when. This approach addressed another concern expressed by our partners that equipping all students in a class with an AR headset was neither cost-effective nor particularly desirable given space constraints and individual students’ preferences (e.g., some students not wanting to wear a headset, simulation sickness concerns, or physical limitations). These early interactions with our instructors were absolutely critical and set the stage for CEASAR to pay attention to the pragmatics of classroom implementation as we continued to prototype and test our technologies.

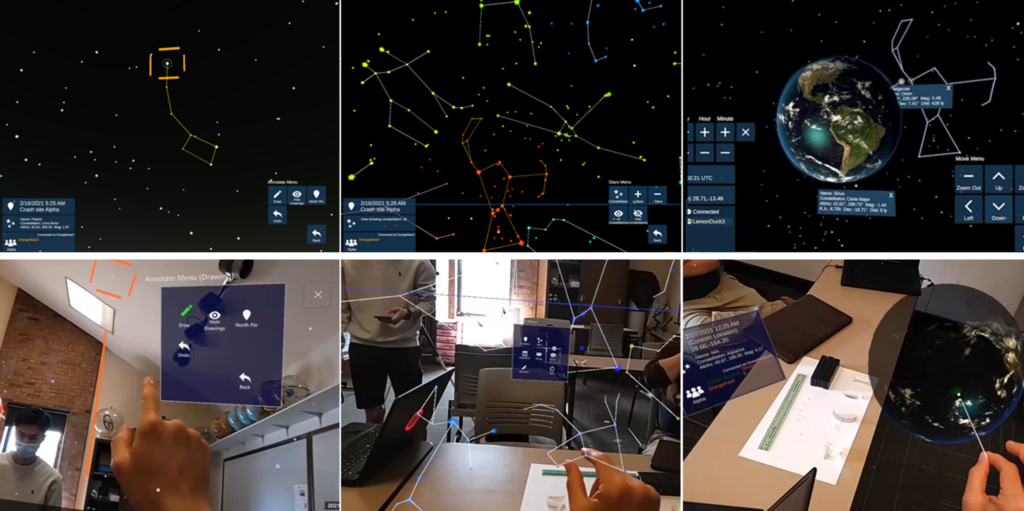

The resulting design, which we tested in numerous undergraduate classes, was a locally networked simulated night sky that could be accessed from multiple devices, both immersive headsets and tablet computers. Networked software enabled all group members to annotate and highlight information, much as in a collaborative online document. Prior to the commercial release of the Microsoft HoloLens 2, we used VR headsets for early pilots, but in all iterations, each group of three to four students had two tablet computers and one immersive headset available (Figure 1). Both types of device could access CEASAR’s star and constellation database and change location and time as needed, and both allowed students to annotate the night sky (e.g., by sketching lines), highlight specific constellations, share location information, and jump to another group member’s location and perspective as they worked. While tablets and headsets offered some similar views and access to commensurate information, the devices afforded different kinds of interactions. For example, the AR headset detected hands and gestures, which allowed students to “air-tap” or pinch to select and reposition holograms. Students could also perceive an embodied first-person “horizon view” of the sky as if standing on Earth. Figure 2 shows the three simulation representations available in the system and compares the three views on the tablet and the AR headset.

Figure 1. A group of 3 students working on the Lost at Sea task using the CEASAR simulation platform (1 AR headset and 2 tablets).

Figure 2. Left: “Horizon view” provides the user with a first-person view of the sky as if standing on Earth. Center: “Star view” removes the horizon limitations from the perspective, giving the user access to the full celestial sphere and constellation catalog. Right: “Earth view” places the user in orbit above the Earth, with the ability to place location pins that change their position on the Earth or let them confer with other users about potential sky-viewing locations. The interface menus for the tablet (top row) are anchored to the bottom left and right of the screen; AR users (bottom row) can use hand gestures to move the interface panels around in 3D space to locations of their choosing.

The specific task we used for data collection, which was also co-created by the research team and instructional partners, was a multi-part problem-solving narrative called “Lost at Sea” that prompts students to use star data to find their location on Earth. In the narrative framing the problem, a space capsule has crashed at night in an unknown ocean location, and students are tasked with using their night sky knowledge to determine the approximate latitude and longitude of the crash. Broadly, the task was designed to contextualize and promote software-group interactions by establishing the need to use the simulation across both AR and tablet interfaces to search for, share, and reach consensus on pieces of information that help attain task objectives. The core learning goals were set after reviewing and discussing the curriculum of the cooperating instructors’ introductory astronomy courses, with the aim of taking foundational skills (i.e., observing and identifying stars and constellations in the night sky) and extending them to tasks that pushed students beyond the content covered in the course (i.e., estimating latitude and longitude based on star observations).

Working with our instructional partners made salient many of the practical issues of using immersive technology in real educational contexts, which led us to design in a way that was maximally flexible and focused on attainable learning goals. First, it provided valuable information about student skills and preferences that we did not anticipate, such as students’ willingness to use headsets for a lab activity and the implicit comparisons students would make between the CEASAR platform and other astronomy software packages. This information made it possible for us to design an accessible immersive simulation that extended what was possible with laptop astronomy software, and that students were likely to choose during the task because it offered interactions and information that tablet and laptop technologies do not. Second, the partnership greatly improved our ability to navigate the inherent tension between “futuristic” and “realistic” technology designs. Without frequent check-ins with our community college partners, we could have veered toward a design that overprivileged the AR perspective, which would not have met the needs of learners who could not, or did not want to, engage with the AR technology. Because of our partnership, we are much more confident that our design solution is transferable to other tasks, and even to other science domains and educational contexts.

The rapport that developed between the research team and the instructional partners meant that design discussions were productive and grounded in the reality of achieving instructional goals. For example, very early in the design process, one of the instructors described the difficulty many of his students have in “adopting an effective visual perspective” for making sense of large-scale phenomena such as the movement of the Earth relative to the sun. He thought a technology such as AR could deliver an embodied perspective that would let students make sense of this positioning relative to their own location on Earth, rather than treating it as an abstract spatial positioning problem. We were able to create a concrete design based on the instructor’s intuition, and it was well received by students. At the same time, our instructor collaborators allowed the research team to push for interaction schemes, data presentations, or task goals that at times extended beyond established classroom expectations or learning goals, with the shared understanding that exploring a particular task or design decision was worth the added complication to the instructional process.

The challenges we have faced within our partnership are relatively minor compared to some of the pragmatic challenges we have encountered, such as a delayed rollout of our preferred hardware platform (the HoloLens 2) and limits on our ability to test the intervention during the pandemic. We overcame our initially limited access to the HoloLens and began productive task and technology development through a combination of paper-based task prototypes and a VR software prototype. These allowed us to bring both the task and similar immersive technology into the classroom and begin gaining critical knowledge about classroom implementation and data collection processes. By the time the AR headsets were finally in the hands of the research team, we had already gained a solid understanding of how students approached the Lost at Sea task, as well as of the logistics of deploying and managing immersive technologies in the classroom. This gave the entire CEASAR team the confidence to make innovative changes to the system to support AR and to integrate Lost at Sea as a full lab activity within the curriculum.

Still, there were times when the objective of surfacing clear research findings was not aligned with our partners’ immediate instructional goals. For example, we found over several iterations that students tended to be more successful with the CEASAR activity if they had time to orient themselves to and explore the novel technologies that made up the CEASAR platform. However, such introductory activities took time, and it was not always easy to find that time in a semester packed with content and labs. We found that understanding our partners’ course schedule requirements, and aligning our data collection plans with them early in the research process, helped us avoid these conflicts. Avoiding them also required mutual flexibility, such as researchers being willing to collect data in non-optimal configurations, or instructors allowing alternative activities or alternative tools to try to reach the same learning goals.

Lessons Learned

First and foremost, our experiences on the CEASAR project have strengthened our belief that practitioner partners on technology innovation projects need to be positioned as co-creators and designers rather than simply as users or contexts for testing. Even if the partners are not intimately involved in the technology development, the ultimate products of these kinds of design endeavors are learning experiences that go far beyond simple interface engagements; they are complex, system-level interactions that require deep content expertise, pedagogical knowledge, and an understanding of how small groups of students will perceive the task and work together.

Second, the design focus has to be broader than the technology. When we worked with instructional partners on the CEASAR project, we rarely focused only on what the technology should look like or what it would do. Rather, we discussed what we wanted students to see, what kinds of problems we wanted them to struggle with, and what we wanted them to discuss with each other. Near the end of the project, we began framing our learning goals in terms of creating “shared representational spaces” where students could productively exchange knowledge and make meaningful references to important artifacts and variables across technologies.

Third, research teams should spend time reconciling perspectives. In many ways, our goals for the students who used CEASAR mirrored our own design process: in both cases, a team benefits from reconciling diverse perspectives to reach a shared understanding of a complex environment.

Next Steps

Based on our success in this project, we will continue to center partnerships in our research. Further, the ways we center partnerships, such as how we frame team meetings or how we empower our partners to be creative and assertive with ideas, will surely continue to evolve. Our partnership work on the CEASAR project has affirmed the transformative potential of strong and equitable collaborations, and it has raised the bar for us on what kinds of partnerships we aspire to have in our work.