Designing the Embodied Coding Environment: A Platform Inspired by Educators and Learners

by Ying Choon Wu, Tommy Sharkey, and Timothy Wood

Key ideas

Partners provided a new vision of a coding platform grounded in students’ creativity, design thinking, and desires for freedom.

Building from visualizations and no-technology classroom activities enabled a shift away from syntax and toward a problem-centric curriculum.

“Ecological validity” in educational research means empowering partners to transform research goals.

Background

An Embodied, Augmented Reality Coding Platform for Pair Programming (NSF grant IIS-2017042), a project based at the University of California San Diego, developed an Embodied Coding Environment (ECE) that enables users to create three-dimensional computer programs in virtual reality (VR). The ECE is a programming language designed to support the learning of computational concepts by leveraging the perceptual and sensorimotor affordances of acting in a 3D spatial medium. Specific design features of this system were motivated by the outcomes of a need-finding study (Sharkey et al., 2022) conducted during the early stages of the project.

The partners on this project include several local high school CS teachers in San Diego County, whose role was to give us a better understanding of current challenges in CS learning and of classroom practices, and to describe their visions of ideal pedagogical tools and platforms. Another partner was our local chapter of the Computer Science Teachers Association (CSTA), through which the research team organized demos of its prototypes and held informal development discussions with educators throughout San Diego County.

Story of the Partnership

Our CS teacher partners were experts in what high school students need in order to understand computational concepts. Working with them revealed the importance of giving learners access to tools that support planning and critical thinking while they develop a computer program. This discovery resonated with the outcomes of formal studies of computer science novices (Robins et al., 2003). Virtually all educators who offered the research team guidance expressed a desire for technologies that empower learners’ creative processes, giving them the “freedom to build” along with the scaffolding needed to develop an approach grounded in “design thinking.” Through these conversations, we developed a new vision of a coding platform where students would be able to visualize their emerging ideas as pictures and engage in rapid prototyping of solutions to authentic problems. In this vision, learning tools would offer assistance with high-level aspects of a problem and strengthen learners’ own metacognitive abilities. Preferred programming tools would also allow learners to draw connections between domains, such as between block- and text-based coding, or between a piece of code and its output.

Our partners helped the research team bring competencies to the foreground and keep details like syntax in the background. For example, they helped us see how much the time and effort required to learn a different syntax for each language distracts from their teaching objectives: the challenges of learning the syntax of various coding languages were frequently viewed as distractions from acquiring foundational computational competencies and programming abilities. As one high school teacher stated, CS tools should allow learners to spend “more time on the problem and less time on syntax.” Others pointed out that in the classroom, they guide students first to “solve the problem without even thinking about coding,” or to integrate their higher-order understanding of the task with lower-level knowledge of syntax by writing about how different elements of their code interact. Another educator emphasized the value of drawing connections between specific elements of code in a computer program and its output, as in relating a robot’s behavior to the code that controls it.

Another key finding that emerged from conversations with CS educators is their regular reliance on metaphor and visualization to teach challenging, abstract computational concepts. Teachers described many forms of roleplay and enactment, such as having students act out the changing values of a temporary variable, play musical chairs to understand for-loops, and imagine themselves as functions represented by paper airplanes that they toss to one another. Our partners also reported frequently using pictures, diagrams, and flowcharts to communicate concepts and solve problems, and encouraging students to use visuals as well.

Through these sustained conversations, our research group realized that visualization and body movement play several different roles in the process of developing code: they can support planning and design, problem solving and debugging, learning, and communication. Accordingly, tools that support drawing, modeling, and gesture capture are key features of the ECE (Twomey et al., 2022). By analogy with existing 3D modeling and design software packages such as Gravity Sketch or Tilt Brush, the hand controller can be used as an instrument to create and place 2D drawings anywhere in the environment (Figure 1 left). Simple 3D models can be generated by extruding 2D shapes into a third dimension (Figure 1 middle and right), and free assets and models can also be imported from online libraries. Finally, the gesture capture tool records the path and direction of the controller in the user’s hand and visualizes it in the environment (Figure 2).
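The article does not say how the extrude tool builds its geometry. As a rough, hypothetical illustration of the general technique, the Python sketch below extrudes a closed 2D outline into a prism: the outline is duplicated at z = 0 and z = depth, the two copies become end caps, and each outline edge becomes a four-sided side face. The names `Mesh` and `extrude` are our own, and the counter-clockwise winding convention is an assumption, not a detail of the ECE.

```python
from dataclasses import dataclass

# Hypothetical types for illustration; not the ECE's internal representation.
Point2 = tuple[float, float]
Point3 = tuple[float, float, float]


@dataclass
class Mesh:
    vertices: list[Point3]
    faces: list[tuple[int, ...]]  # each face is a tuple of vertex indices


def extrude(outline: list[Point2], depth: float) -> Mesh:
    """Extrude a closed 2D polygon along the z-axis into a prism.

    Assumes the outline is listed counter-clockwise (viewed from +z) so
    that all faces wind consistently outward.
    """
    n = len(outline)
    if n < 3:
        raise ValueError("an outline needs at least 3 points")

    # Copy the outline once at z = 0 (bottom) and once at z = depth (top).
    bottom = [(x, y, 0.0) for x, y in outline]
    top = [(x, y, depth) for x, y in outline]
    vertices = bottom + top

    faces: list[tuple[int, ...]] = [
        tuple(reversed(range(n))),  # bottom cap, reversed so it faces -z
        tuple(range(n, 2 * n)),     # top cap, faces +z
    ]
    # Each edge (i -> j) of the outline becomes a rectangular side face.
    for i in range(n):
        j = (i + 1) % n
        faces.append((i, j, n + j, n + i))

    return Mesh(vertices, faces)


# Extrude a unit square by 2 units into a box.
box = extrude([(0, 0), (1, 0), (1, 1), (0, 1)], depth=2.0)
print(len(box.vertices), len(box.faces))  # 8 6
```

A prism is the simplest case; tapering, bevels, or curved sweeps would need more elaborate geometry than this sketch attempts.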

Figure 1. Left: The 2D drawing tool supports free-form sketching and writing. Middle and Right: Procedural 3D models created with the extrude tool. The user begins by defining a 2D shape and then extending it into three dimensions.

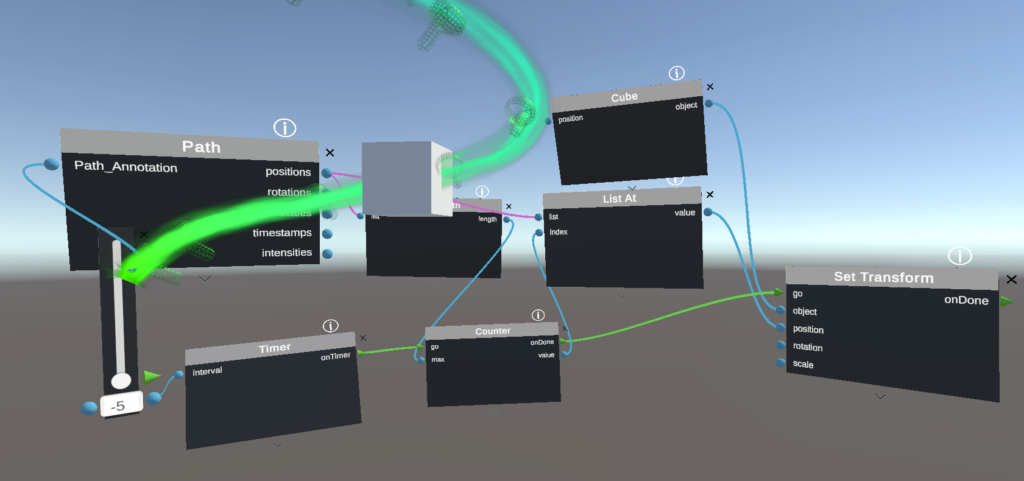

Figure 2. The gesture capture tool records the movement of the user’s controller and visualizes it as a trajectory through 3D space. The direction of movement is indicated by the gradation of color from bright to dim. Green lines between nodes indicate event connections, while blue and pink ones indicate different types of data connections.
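To make the trajectory encoding in Figure 2 more concrete, the sketch below assigns a brightness to each sampled controller position so that the path fades from bright to dim. It assumes (the caption does not specify) that the start of the gesture is drawn brightest and the end dimmest, and that the fade is linear; `TrailPoint`, `color_trajectory`, and the `min_brightness` parameter are hypothetical names, and reading positions from an actual VR controller is outside its scope.

```python
from dataclasses import dataclass

Point3 = tuple[float, float, float]


@dataclass
class TrailPoint:
    position: Point3
    brightness: float  # 1.0 = brightest; lower values are dimmer


def color_trajectory(samples: list[Point3], min_brightness: float = 0.2) -> list[TrailPoint]:
    """Assign each sampled controller position a brightness along the path.

    Assumption: the first sample is drawn brightest and the last dimmest,
    so the fade from bright to dim shows the direction of movement.
    """
    n = len(samples)
    if n == 0:
        return []
    if n == 1:
        return [TrailPoint(samples[0], 1.0)]
    trail = []
    for i, pos in enumerate(samples):
        t = i / (n - 1)  # 0.0 at the first sample, 1.0 at the last
        brightness = 1.0 - t * (1.0 - min_brightness)  # linear fade
        trail.append(TrailPoint(pos, brightness))
    return trail


# Positions as they might be sampled from a hand controller during a gesture.
trail = color_trajectory([(0.0, 0.0, 0.0), (0.5, 0.1, 0.0), (1.0, 0.2, 0.0)])
print([round(p.brightness, 2) for p in trail])  # [1.0, 0.6, 0.2]
```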

This suite of tools serves several key functions within the ECE. First, it supports rapid prototyping and free-form whiteboarding that can foster planning and design in the early stages of code development. Second, it supports a radically different method of annotating and organizing code than traditional text-based code on a computer monitor, which is usually arranged linearly from top to bottom. Lines of code in traditional text-based programs are often grouped into sections by function and annotated with comments describing what each section does. Embodied coding, on the other hand, enables learners to use their sketches, gestures, models, and text as annotations that anchor different segments of their programs to different locations in the environment (Figure 3 left).

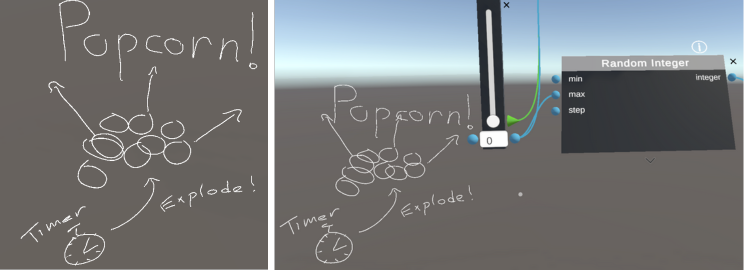

Imagine, for instance, that the goal is to simulate popcorn bursting. Initial ideation might lead the user to realize that the key programmatic elements will include code to generate representations of popcorn, a timer to control when the bursting occurs, and a random explosion generator. Using the annotation tools, the user can quickly sketch out these elements and their interrelationships (Figure 3 left). Next, the user assembles the necessary nodes and connections and places them near the appropriate annotations (Figure 3 right).
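In the ECE these three elements would be built from nodes and connections. The Python sketch below is only a text-based analogue of that plan, with one function per annotated element: kernel generation, a timer step, and a random burst. All names, the 1 to 5 second pop window, and the velocity ranges are arbitrary assumptions chosen for illustration, not values taken from the ECE.

```python
import random
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class Kernel:
    position: Vec3
    pop_time: float                   # seconds after start when it bursts
    popped: bool = False
    velocity: Vec3 = (0.0, 0.0, 0.0)  # set by the burst


def make_kernels(count: int, rng: random.Random) -> list[Kernel]:
    """Element 1: generate representations of popcorn kernels."""
    return [
        Kernel(
            position=(rng.uniform(-0.5, 0.5), 0.0, rng.uniform(-0.5, 0.5)),
            pop_time=rng.uniform(1.0, 5.0),
        )
        for _ in range(count)
    ]


def random_burst(rng: random.Random) -> Vec3:
    """Element 3: a random explosion velocity with an upward component."""
    return (rng.uniform(-1.0, 1.0), rng.uniform(2.0, 4.0), rng.uniform(-1.0, 1.0))


def tick(kernels: list[Kernel], now: float, rng: random.Random) -> int:
    """Element 2: one timer step; pops every kernel whose time has come."""
    popped_now = 0
    for k in kernels:
        if not k.popped and now >= k.pop_time:
            k.popped = True
            k.velocity = random_burst(rng)
            popped_now += 1
    return popped_now


rng = random.Random(42)  # seeded so runs are repeatable
kernels = make_kernels(20, rng)
now = 0.0
while not all(k.popped for k in kernels):
    now += 0.5  # the timer advances in half-second steps
    tick(kernels, now, rng)
print(sum(k.popped for k in kernels))  # 20
```

Keeping each element in its own function mirrors the idea of anchoring each segment of the program to its own annotation.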

Figure 3. Left: Annotations delineating the key elements of a program designed to simulate popping popcorn. Right: Code is arranged in the proximity of the relevant annotation.

Lessons Learned

Through this partnership, we learned that we had to shift the design focus of the project to serve educator goals (e.g., a focus on competencies) and practices (e.g., leveraging metaphor and embodied learning). We also learned about unmet educator needs and now plan to design additional resources for educators. Finally, we came to a deeper understanding of what “ecologically valid” design entails.

Our partners have contributed invaluable insight that has guided the evolution of the design and implementation of the ECE. Their suggestions motivated our team’s focus on built-in features that support problem solving and learning by simplifying the debugging process and helping learners ground the specific elements of code they create within a more global understanding of their program. Conversations with our educational partners also revealed the richness and diversity of the metaphors and techniques used in the classroom to reason about abstract computational concepts. For this reason, ECE tools are designed to be used flexibly, so that idiosyncratic mappings between computational concepts and sensorimotor experience can be captured. For instance, if a specific individual conceptualizes a loop as a circular structure or an if-statement as a forked path, they can create annotations that reflect this particular understanding.

The voices of our community partners have also shaped many practical decisions about the implementation of the ECE. For instance, our team opted for a flow-based coding system to make learning the syntax of the programming environment more intuitive, because educators tended to view the particularities of learning a new syntax as a distraction from learning basic computational concepts. Central to the ECE is a library of nodes, which can be thought of as functions; by chaining node-based functions into a pathway, users can create programs that generate, manipulate, animate, constrain, or transform objects in the environment. Event connections determine the order of execution and can originate from user-generated events (such as interacting with a UI element), controller events, or timer-based events. Event connections are green and show the general structure of the program (Figure 2). Data connections are blue (or pink for arrays) and transmit program values between nodes (Figure 2). Together, data and event connections define the dependencies that must be satisfied before a node on the main event pathway runs.
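The execution model described above can be approximated in a few lines of Python. This is a minimal sketch under our own simplifying assumptions, not the ECE's implementation: each hypothetical `Node` wraps a function, data connections are modeled as a list of upstream nodes whose values are pulled (recomputed) before the node runs, and event connections are a list of nodes to trigger next, which fixes the order of execution. The node names loosely echo the popcorn example but are illustrative only.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Node:
    name: str
    fn: Callable[..., Any]  # the function this node computes
    data_inputs: list[Node] = field(default_factory=list)  # incoming data connections
    next_nodes: list[Node] = field(default_factory=list)   # outgoing event connections
    value: Any = None


def evaluate(node: Node) -> Any:
    """Pull values over data connections first, then run the node itself."""
    args = [evaluate(src) for src in node.data_inputs]
    node.value = node.fn(*args)
    return node.value


def fire(node: Node, log: list[str]) -> None:
    """Run a node on the event pathway, then follow its event connections in order."""
    evaluate(node)
    log.append(node.name)
    for nxt in node.next_nodes:
        fire(nxt, log)


# A data-only node: it runs only when another node needs its value.
count = Node("count", lambda: 3)
spawn = Node("spawn", lambda n: [f"kernel{i}" for i in range(n)], data_inputs=[count])
announce = Node("announce", lambda: "done")
timer = Node("timer", lambda: None)  # stands in for a timer-based event

# Event connections: timer -> spawn -> announce.
timer.next_nodes.append(spawn)
spawn.next_nodes.append(announce)

log: list[str] = []
fire(timer, log)
print(log)          # ['timer', 'spawn', 'announce']
print(spawn.value)  # ['kernel0', 'kernel1', 'kernel2']
```

Pulling data on demand keeps pure data nodes such as `count` off the event pathway. A production system would likely cache values and detect cycles, which this sketch does not attempt.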

Working with our educational partners has reinforced our view of the value of ecological validity in design. Ultimately, reaching any particular community of learners (in this case, CS novices from backgrounds that are typically underrepresented in STEM) requires designers and researchers to understand the dynamics that shape learning and motivation within that community. While our educational partners have offered crucial insights in this regard, we also hope to deepen our knowledge of these dynamics through direct exchanges with underrepresented learners.

Next Steps

In conversation with our strategic partners, we are currently developing a library of tutorials and guided activities for use in classroom or after-school contexts. One challenge in this process is engagement: what kinds of content and projects are most likely to motivate learning with the ECE? We are developing learning materials with particular attention to the empowering potential of computing, which can enable users to adopt perspectives and reach insights they might not have imagined on their own. Our partners have pointed out that focusing exclusively on game development can introduce unintentional biases that contribute to gender inequity, because boys tend to enjoy games more than girls. Instead, several educators suggested creating content oriented around authentic global or community problems and the role of computing in solving them. Examples could include exploring how 3D modeling can be used to develop solutions to climate change or the COVID-19 pandemic. Additionally, one of our partners suggested applying embodied coding to themes related to the UN Sustainable Development Goals. As our team transitions from creating a functional programming environment to making it usable in diverse learning contexts, we will continue to explore what captures the interest of the underserved learners who are the target of this study.

Moving forward, we envision that the role of our partners will evolve as the focus of our project expands from design and development to testing in real-world learning environments. To navigate this transition, we are drawing on the expertise of our educational partners to integrate the ECE into classroom and after-school activities. We are also forming new partnerships focused on reaching learners from underrepresented backgrounds. Our ultimate goal is to test the impact of Embodied Coding on motivation, interest, and computational concept learning among novice coders at the secondary school level.